I’ve owned the Ghost S1 case for a few years and I’ve been using normal air cooling (NH-L12). When I bought the case (I think it was through Kickstarter), I opted to get the big top-hat as well for future “needs”. I didn’t end up using the top-hat, as the setup worked great: the fans only ramped up during gaming (with some noise) and were otherwise very quiet.

I recently upgraded my system to a 12-core Ryzen 9 5900X CPU and a Radeon RX 6800 GPU. I found it to be a bit too hot but still manageable with air cooling. I did eventually decide to undervolt the CPU and lower the max TDP to 110 W, and I also added a small top-hat with 2 extra fans (Noctua NF-A12x25 PWM). This worked great, and most people should stop here as this is an awesome setup.

But working from home (Corona) made me a bit bored, and I also had that extra large top-hat that could fit a radiator, so I decided to take the dive into the unknown and build my first custom water cooling loop.

Decisions

The main thing I wanted to accomplish was a quiet solution during heavy GPU use. The existing CPU cooling already worked great; what was missing was better GPU cooling. The main problem was fitting a reservoir and pump somewhere. There are two obvious viable solutions:

- Get a CPU block with an integrated pump. Options include the “Swiftech Apogee Drive II” (expensive but very powerful) or the “Alphacool Eisbaer LT” (weaker but enough for smaller loops).

- Get a small enough pump or pump-reservoir combo to fit somewhere else in the case. One option would be the “Alphacool Eisstation 40 DC-LT” combined with the “Alphacool DC-LT 2”. This is pretty much the same pump as in the Eisbaer LT mentioned above (it is used in Alphacool’s AIO solutions), so it is a bit underpowered. If it proved too weak, you could always add the “Alphacool Eisbaer LT” in series for two pumps in total.

I went with the second option, as the dimensions are so small that I should be able to fit it somewhere. I assumed any GPU block would work and fit (this proved not to be true, as I had issues fitting my block). I decided to use soft tubing as it’s easier to work with in cramped spaces. I used Alphacool’s somewhat thinner 12.7/7.6 mm tubing, the kind used in rack servers.

Solution

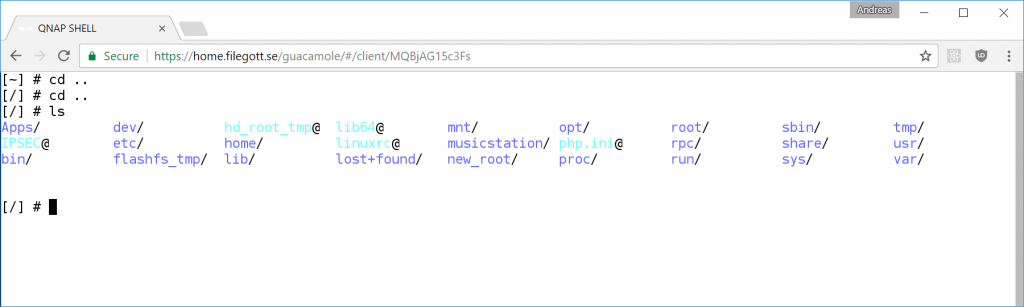

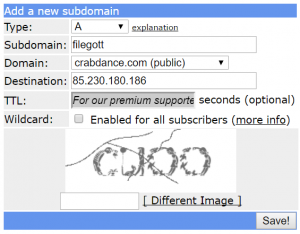

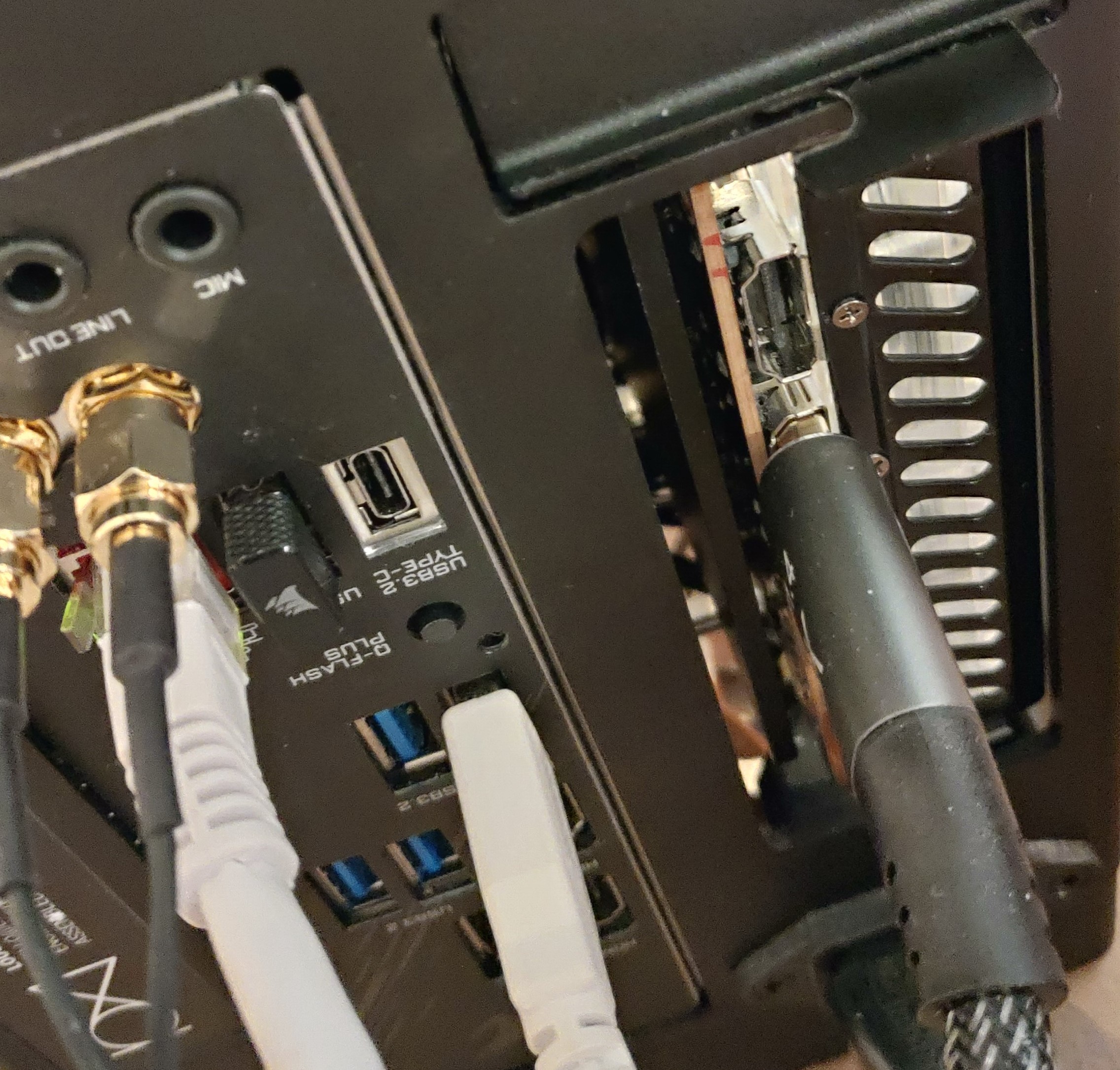

The custom water cooling components used for my Ghost S1 are listed below (first version of the case, with a PCI-e gen4 riser bought later):

Case: Ghost S1 (first version) with L and S top-hat.

Pump: Alphacool DC-LT 2 – 2600rpm Ceramic – 12V DC – (3 phase sine wave PCB)

Reservoir: Alphacool Eisstation 40 DC-LT

Fans: Noctua NF-A12x25 PWM (4 of them in total)

Radiators: Alphacool NexXxoS ST30 Full Copper 240mm, Alphacool NexXxoS ST25 Full Copper 120mm radiator

GPU block: Alphacool Eisblock Aurora Acryl GPX-A Radeon RX 6800/6800XT/6900XT Reference with Backplate.

CPU block: Barrow LTYK3A-04 V2 (cheap block with low flow restriction)

Tubing: Alphacool tube AlphaTube TPV 12,7/7,6 – black matte 3,3m

Fittings: Alphacool HF compression fitting TPV Metall – 90° rotatable 12,7/7,6mm – Black, Alphacool HF compression fitting TPV Metall – 12,7/7,6mm Straight – Black

Problems encountered

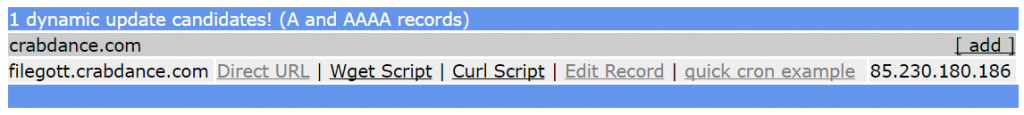

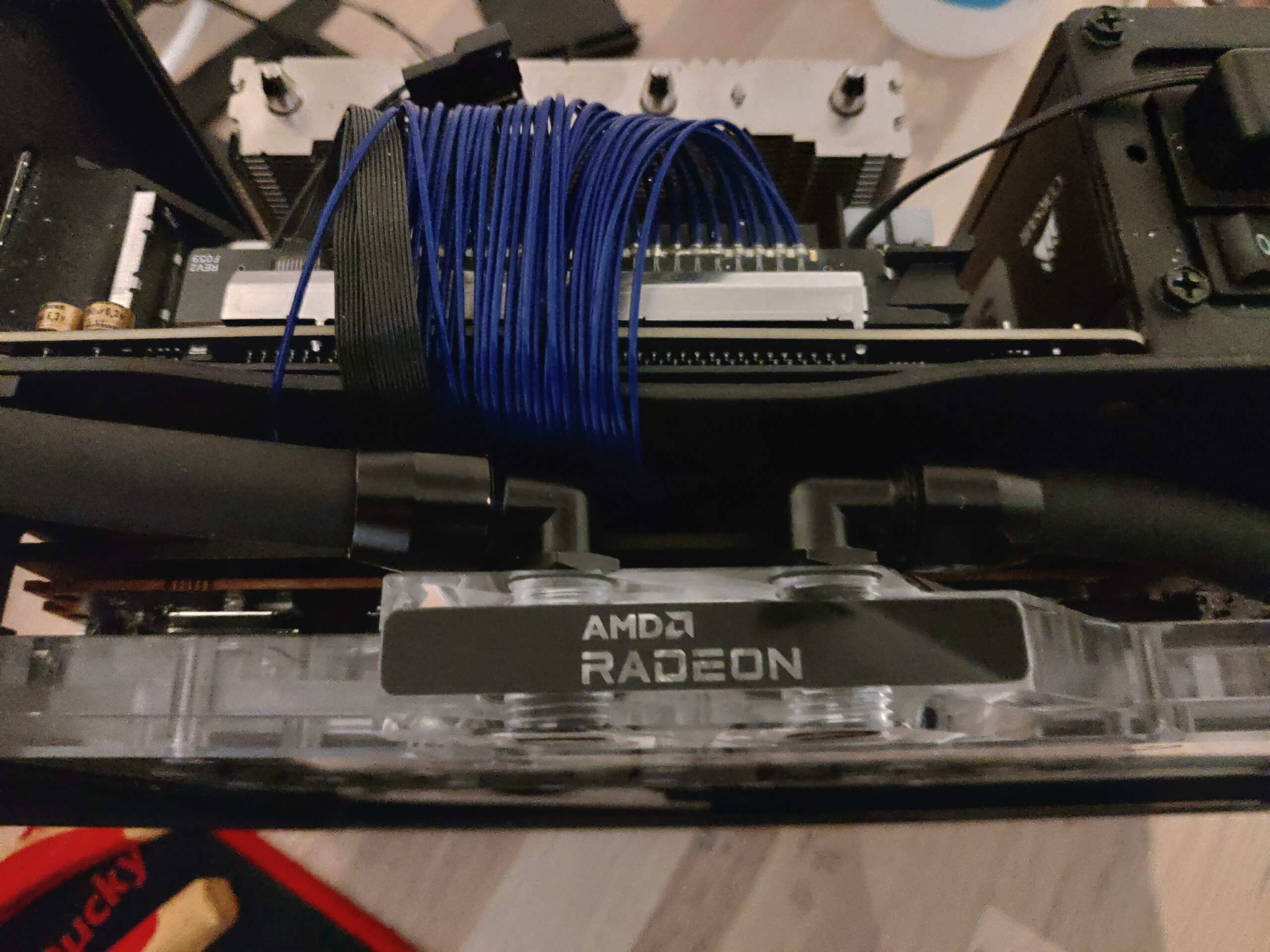

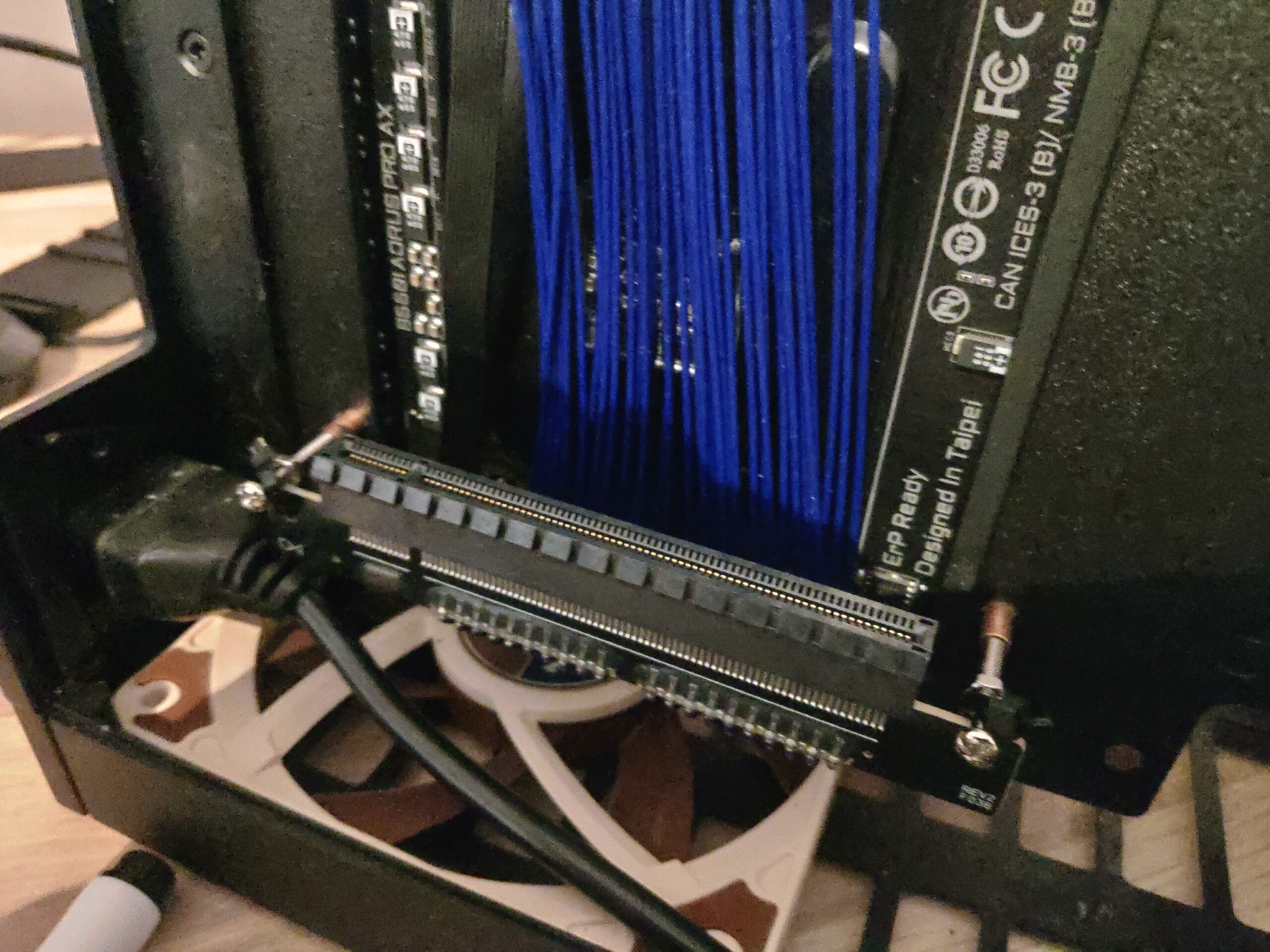

- I haven’t received all the parts needed (~3-4 weeks). My solution is to water cool only the GPU for now. I will extend the loop with the extra 120mm radiator and the CPU block at a later date when the parts have arrived.

- The GPU water block from Alphacool was too thick: even with only 19mm high 90° fittings, I could not close the case. The block was also too tall (~2-3mm) and interfered with the top-hat. The easiest solution would be to get another GPU block (EKWB has some nice ones). Instead, I modified the Ghost PCI-e riser mounting and added another IO-plate to my GPU. I could then place the fittings on the inside of the GPU without any issues. This also allowed me to move the GPU almost flat against the side wall of the case and lower it about 5mm so it wouldn’t interfere with the fan and radiator.

I could then place the GPU fittings on the inside without any issues.

Yes, not my best modification: I used zip ties and extra-long screws :) Maybe I will change this, but it works OK for now. The riser mounting also pushes against the main power inlet, but it’s fine. I believe the later versions of the Ghost S1 mount the GPU a bit lower, so this lowering would not be needed there.

The new IO-plate for the GPU worked great, with no need to permanently modify the case or GPU. I just took an old IO-plate I had lying around and drilled 4 holes in it (I kept the original one in case it’s ever needed). As of 2021-03-30, an alternative flat GPU terminal for my water block is available to buy from Alphacool (~15€), so my modification above is not needed if you buy that extra terminal.

- The pump and reservoir combo did not fit behind the GPU as first planned; my Radeon 6800 is too long. The solution was to place the pump-reservoir combo in the top-hat, where it fits perfectly next to the radiator.

- I managed to “lose” the cables attached to the pump when I was messing around with the case. The solution was to just order a new pump (30€), as trying to open the pump without damaging it and solder the disconnected cables back on proved too difficult for me. Be careful when handling the Alphacool pump and don’t jerk the connections to it!

Conclusion

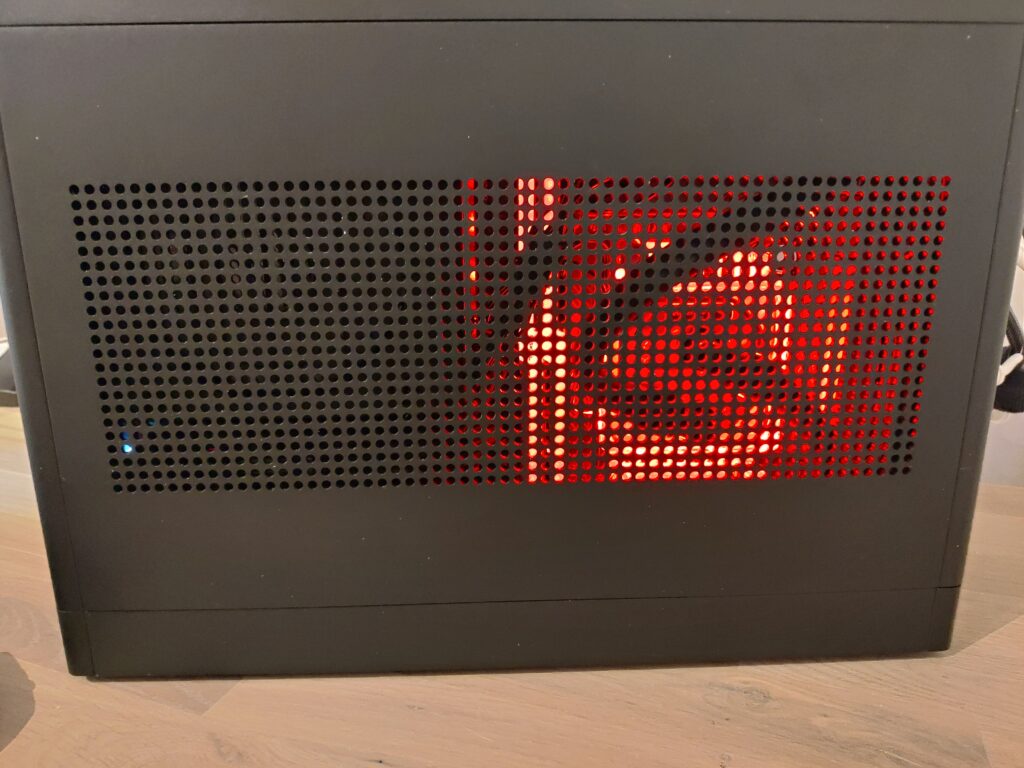

The solution is temporary and only the GPU is water cooled, so I’m still using the NH-L12 to cool the CPU while waiting for all the parts to complete the full loop. But it works awesome like this too. It will be interesting to see the thermals with a complete loop: CPU, GPU and 2 radiators (240 and 120mm).

It’s now a larger case than before because of the large and small top-hats, but it’s still very small and quiet when compared to other solutions. The NCASE M1 case is an option as well (cheaper if you don’t own the Ghost S1 already), but I really like the Ghost S1 case. I’m very pleased with my current setup, and it will probably be even better when the full loop is complete.

I added another zip tie at the corner of the GPU to stabilize the GPU a bit more because of the PCIe riser mounting modification I did.

Update (2021-04-16): Got all the parts and have now completed the loop and it works great 🙂

When doing some Ethereum crypto-mining, the GPU temperature is about 50 degrees Celsius without a fan grill (fans spinning at around 950 rpm). The temperature goes up to 55 degrees Celsius when the fan grill is on! I’ve read that putting the fans in a pull configuration right next to the fan grill might fix this issue, so I might try this at a later date.

I’m not a miner, I’m just putting the GPU to use when possible so I can get some extra cash for my hobby projects.